Happy Sunday, dispatchers!

Something interesting happened when China's hottest AI suddenly went dark to the world. While tech giants scrambled and markets panicked, one man saw an opportunity.

Erik Voorhees, the same maverick who once built a Bitcoin gambling site and later a billion-dollar crypto exchange, ShapeShift, quietly opened a backdoor.

Within hours of his new project's token launch, it hit a billion-dollar valuation.

Within days, it became both the talk of Silicon Valley and the centre of a brewing scandal.

This story is about what happens when the unstoppable force of artificial intelligence meets the immovable object of crypto's anti-censorship ethos.

The result? Well, that's what we're here to unpack.

In today's deep dive, we'll look behind the curtain of crypto's most ambitious AI project yet. We'll explore why some are calling it the future of AI, while others see it as a dangerous experiment in removing AI safeguards.

And maybe, just maybe, we'll figure out if this is the beginning of tech's next great war.

Buckle up – this rabbit hole goes deep.

The ShapeShift of AI

When Erik Voorhees announced Venice AI's token launch on Base, most people missed the irony. The man who once built ShapeShift to free crypto from centralised exchanges was now attempting something even more ambitious: liberating AI from centralised control.

Let's back up.

Venice AI, launched in May 2024, isn't just another AI chatbot. So what is it?

It's an entire platform built on a radical premise: what if you could access the world's most powerful AI models without surveillance, without censorship, and without leaving a trace?

The numbers suggest Voorhees might be onto something.

Since launch, Venice has attracted 400,000 registered users, handles 15,000 inference requests per hour, and maintains 50,000 daily active users.

What makes Venice truly different is its approach to AI access.

Unlike ChatGPT or Claude, which run on their own proprietary models, Venice acts more like a privacy-preserving gateway to multiple AI models.

Want to use Meta's LLaMA? No problem.

Prefer the newly restricted DeepSeek? Venice has you covered.

It's this last point that proved particularly prescient.

When DeepSeek, China's rising AI star, suddenly limited new registrations to users with Chinese phone numbers, Venice became one of the few remaining gateways to what many consider the most advanced open-source AI model.

The timing couldn't have been better – or more suspicious, depending on who you ask.

Before we dive into the controversy that followed, we need to understand exactly how this "uncensored AI" actually works.

Because as with most things in crypto, the devil is in the technical details.

Under the Hood

If Venice AI's promise sounds too good to be true – private, unrestricted access to top AI models without surveillance – that's because making it work required some clever engineering.

The secret sauce? A combination of blockchain technology, decentralised GPU networks, and what Venice calls "abliterated models."

Let's break it down.

First, there's the privacy layer.

Unlike traditional AI platforms that store every conversation on their servers, Venice claims to keep nothing. Your chats stay local, encrypted on your device.

When you switch browsers or devices, your history starts fresh – because there is no central database keeping track.

This raises an obvious question: if everything's local, how does the AI processing actually happen?

Venice routes your queries through a decentralised network of GPU providers.

Each node only sees the request in front of it, with nothing attached that identifies who you are. It's like handing each message to a different stranger who never learns your name.
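The routing described above can be sketched in a few lines of Python. This is a minimal illustration, not Venice's actual client or API. The class and function names (`LocalChat`, `route`) and the node labels are hypothetical.

One assumption needs stating plainly. Standard chat-completion APIs are stateless, so a client that stores history locally generally has to send the relevant conversation text along with each request. What this sketch withholds is identity: there is no account ID or session token in the payload. It also picks a random node for every request, so no single provider builds up a long-term record of one user.

```python
import random
from dataclasses import dataclass, field


@dataclass
class LocalChat:
    """Hypothetical client: conversation history lives only on the user's device."""
    messages: list = field(default_factory=list)

    def build_request(self, prompt: str) -> dict:
        # Store the new user turn locally, then copy the history into the payload.
        self.messages.append({"role": "user", "content": prompt})
        # Deliberately no user_id, email, or session token in the payload.
        return {"messages": list(self.messages)}

    def record_reply(self, text: str) -> None:
        # The model's answer is also kept only on the device.
        self.messages.append({"role": "assistant", "content": text})


def route(chat: LocalChat, prompt: str, providers: list, rng: random.Random) -> tuple:
    """Pick a GPU node at random for this one request (an assumed policy)."""
    request = chat.build_request(prompt)
    node = rng.choice(providers)
    return node, request


rng = random.Random(42)
chat = LocalChat()
node, request = route(chat, "Summarise this contract", ["gpu-a", "gpu-b", "gpu-c"], rng)
chat.record_reply("Here is a summary...")
print(node, request)
```

Wiping the device's storage empties `chat.messages`, which mirrors the "history starts fresh" behaviour. It also means the privacy promise ultimately rests on the nodes not logging what they receive.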

Then there's the "abliteration" process – Venice's approach to censorship resistance. They take open-source AI models like LLaMA or DeepSeek and modify them to remove built-in restrictions.
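"Abliteration" is the open-source community's name for a published technique called directional ablation, which was popularised in 2024. It works in two steps:

1. Estimate a "refusal direction" in the model's hidden activations.
2. Edit the weights so that nothing can write along that direction.

The toy Python sketch below shows both steps on tiny hand-made matrices. It is not Venice's pipeline, which the source does not describe, and the function names are hypothetical. Real implementations use activations from many prompts and apply the projection to every matrix that writes into the model's residual stream.

```python
import math


def mean_vector(rows: list) -> list:
    """Element-wise mean of a list of equal-length vectors."""
    n = len(rows)
    return [sum(column) / n for column in zip(*rows)]


def normalize(v: list) -> list:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def refusal_direction(harmful_acts: list, harmless_acts: list) -> list:
    """Unit vector from the mean 'harmless' activation to the mean 'harmful' one."""
    diff = [a - b for a, b in zip(mean_vector(harmful_acts), mean_vector(harmless_acts))]
    return normalize(diff)


def orthogonalize(weight: list, r: list) -> list:
    """Return W' = W - r (r^T W) for a d x k matrix W whose outputs are d-dimensional.

    Every column of W' has zero component along the unit vector r, so this
    layer can no longer push activations in the refusal direction.
    """
    d, k = len(weight), len(weight[0])
    proj = [sum(r[i] * weight[i][j] for i in range(d)) for j in range(k)]  # r^T W
    return [[weight[i][j] - r[i] * proj[j] for j in range(k)] for i in range(d)]


# Toy 3-dimensional "activations" captured on two prompt sets.
harmful = [[2.0, 0.0, 1.0], [4.0, 0.0, 1.0]]
harmless = [[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]
r = refusal_direction(harmful, harmless)
W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(r, orthogonalize(W, r))
```

The edit is permanent and baked into the weights, which is why an abliterated model can be shipped and run like any other open model.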

Here's where critics have raised valid concerns: if you're removing AI safeguards, what prevents misuse?

Venice's answer is a tiered system.

Free tier: Basic safeguards remain

Private tier: More flexibility, with 20 daily prompts

Pro tier ($18/month): Full access to unrestricted models
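The tier list behaves like a small access-control table. The Python sketch below encodes it under stated assumptions:

- The 20-prompt daily cap for the Private tier comes from the text.
- Treating Pro as uncapped is an assumption.
- The text gives no daily limit for the Free tier, so the sketch simply does not enforce one.
- Assuming the Private tier lacks unrestricted models is also a guess.

The `Tier` class and `can_send` function are hypothetical, not Venice's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    daily_prompts: Optional[int]  # None = no cap enforced in this sketch
    unrestricted_models: bool     # may the user pick models with safeguards removed?


TIERS = {
    # Free-tier limit is not given in the text, so none is enforced here.
    "free": Tier("free", daily_prompts=None, unrestricted_models=False),
    # 20 daily prompts per the text; "more flexibility" is not modelled further.
    "private": Tier("private", daily_prompts=20, unrestricted_models=False),
    # $18/month; uncapped prompts is an assumption.
    "pro": Tier("pro", daily_prompts=None, unrestricted_models=True),
}


def can_send(tier: Tier, prompts_today: int, wants_unrestricted: bool) -> bool:
    """Return True if a prompt is allowed under the tier's (assumed) rules."""
    if wants_unrestricted and not tier.unrestricted_models:
        return False
    if tier.daily_prompts is not None and prompts_today >= tier.daily_prompts:
        return False
    return True


print(can_send(TIERS["private"], prompts_today=19, wants_unrestricted=False))
```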

The platform offers access to an impressive array of models.

OpenAI's GPT variations

Anthropic's Claude

Meta's LLaMA

The controversial DeepSeek

Various specialised coding models

Each model can be selected based on your specific needs – a luxury not offered by most AI platforms.

Perhaps the most innovative aspect is how Venice handles the economics of AI computing.

This is where the VVV token comes in, and where things get really interesting.

The Billion-Dollar Question

When Venice AI launched its VVV token on January 27, 2025, it did something unusual in crypto: it skipped the presale.

Instead, 50% of the total 100 million tokens went straight to the community through an airdrop.

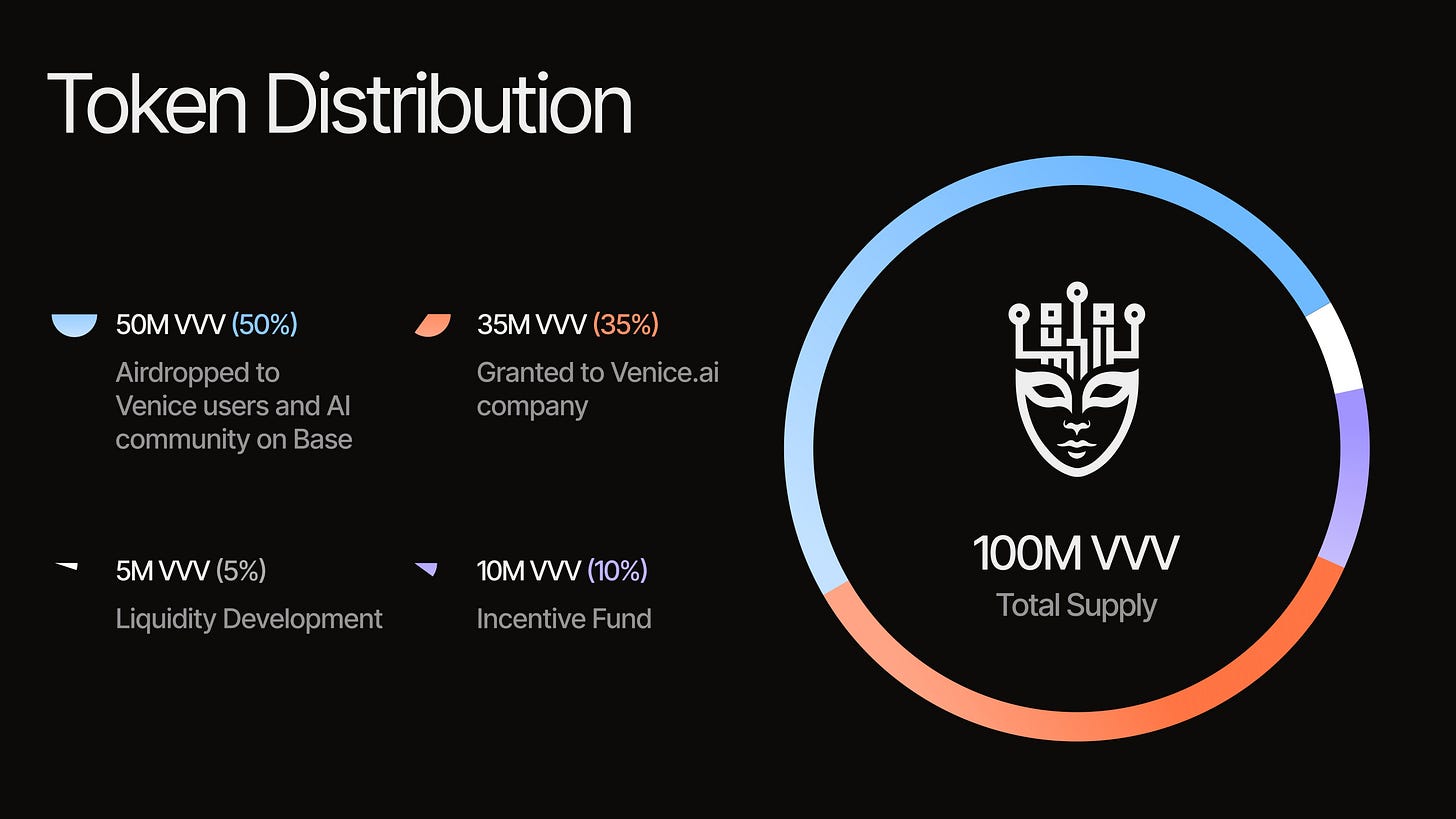

The distribution looked clean on paper.

50% to community (airdrop)

35% to Venice company (partially vested)

10% to Venice Incentive Fund

5% for liquidity development
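Converting the percentages into token counts is simple arithmetic against the 100 million VVV supply. The short Python check below also confirms that the four buckets add up to the whole supply. The variable and function names are illustrative.

```python
TOTAL_SUPPLY = 100_000_000  # VVV at launch, per the text

ALLOCATION_PCT = {
    "community airdrop": 50,
    "Venice company (partially vested)": 35,
    "Venice Incentive Fund": 10,
    "liquidity development": 5,
}


def allocation_tokens(total: int, pct: dict) -> dict:
    """Turn whole-number percentages into token counts."""
    if sum(pct.values()) != 100:
        raise ValueError("allocations must add up to 100%")
    return {bucket: total * p // 100 for bucket, p in pct.items()}


for bucket, tokens in allocation_tokens(TOTAL_SUPPLY, ALLOCATION_PCT).items():
    print(f"{bucket:<36} {tokens:>12,}")
```

That works out to 50 million, 35 million, 10 million and 5 million VVV respectively.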

It's what happened next that got everyone's attention.

The VVV token took just 90 minutes after its January 27 launch to hit a fully diluted valuation (FDV) of $1 billion, before pushing even higher to $1.65 billion.

With only a quarter of its 100 million total tokens in circulation, the actual market cap settled at a still-impressive $383 million.
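The gap between the billion-dollar headlines and the $383 million figure comes down to two definitions:

- Fully diluted valuation is price × total supply.
- Market cap is price × circulating supply.

The Python sketch below applies both definitions to the figures in the text. It assumes exactly 25 million VVV (a quarter of supply) were circulating. The derived prices are back-of-the-envelope implications, not reported quotes.

```python
TOTAL_SUPPLY = 100_000_000
CIRCULATING = 25_000_000  # "a quarter" of total supply (assumed exact)


def price_from_fdv(fdv_usd: float) -> float:
    return fdv_usd / TOTAL_SUPPLY


def price_from_market_cap(mcap_usd: float) -> float:
    return mcap_usd / CIRCULATING


def market_cap(price: float) -> float:
    return price * CIRCULATING


def fdv(price: float) -> float:
    return price * TOTAL_SUPPLY


p_1b = price_from_fdv(1_000_000_000)             # implied price at the $1B FDV mark
p_settled = price_from_market_cap(383_000_000)   # implied price at the $383M market cap
print(f"$1B FDV   -> ${p_1b:.2f}/token, market cap ${market_cap(p_1b):,.0f}")
print(f"$383M cap -> ${p_settled:.2f}/token, FDV ${fdv(p_settled):,.0f}")
```

At the $1 billion mark, that implies about $10 per token and a $250 million market cap. The $383 million market cap implies roughly $15.32 per token, or an FDV of about $1.53 billion.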

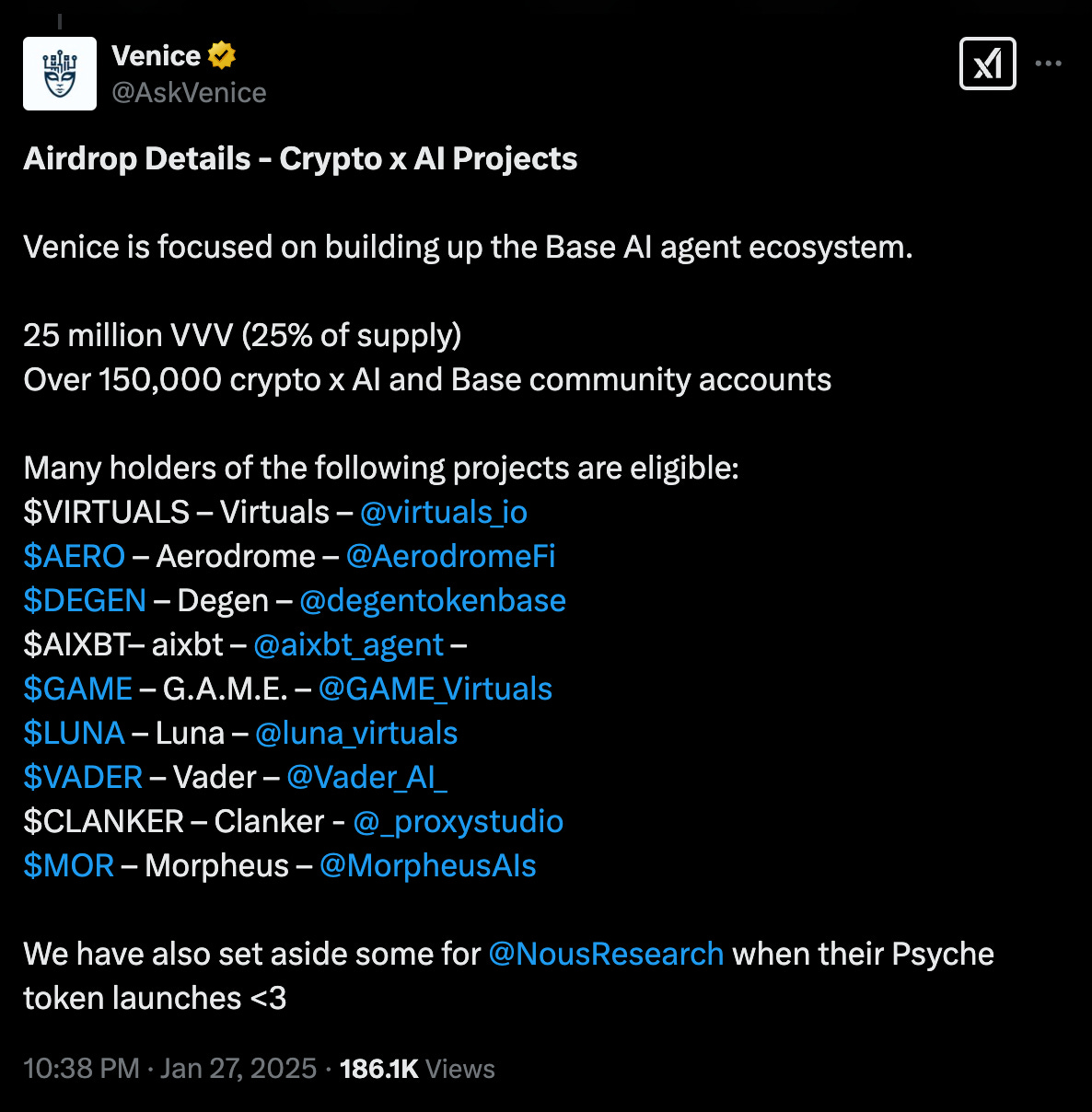

More than 40K holders jumped in, drawn by Venice's unique proposition: stake VVV tokens and get ongoing access to uncensored AI capabilities, including the coveted DeepSeek-R1, whose maker had just restricted global access.

Coinbase, in a rare move, listed the token on day one.

The crypto world had seen plenty of token pumps before. This one felt different – it was backed by a working product with real users.

Then came the whispers.

Two contributors from Aerodrome Finance, one of Venice's launch partners, were spotted making massive purchases shortly after the token went live – but before any public announcements.

Their $50,000 position ballooned to $1 million in under an hour.

The crypto community erupted.

Aerodrome quickly suspended the contributors, but the damage was done. VVV's price plunged 50% as accusations of insider trading spread across social media.

Despite the drama, Venice's actual technology and business model remained compelling.

The platform introduced a novel staking mechanism where VVV holders get proportional access to AI computing power.

Stake 1% of total VVV, get 1% of the platform's AI capacity – forever.
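Taken literally, this rule is a pro-rata share: your slice of capacity equals your stake divided by total VVV. The minimal Python sketch below follows that reading. It uses total VVV as the denominator because that is how the text phrases it. The capacity figure of 1,000,000 requests a day is a hypothetical unit for illustration, not a Venice number.

```python
def capacity_share(my_stake: float, total_vvv: float) -> float:
    """Fraction of platform capacity for a given stake (pro-rata reading of the text)."""
    if total_vvv <= 0:
        raise ValueError("total VVV must be positive")
    return my_stake / total_vvv


def my_capacity(my_stake: float, total_vvv: float, platform_capacity: float) -> float:
    return platform_capacity * capacity_share(my_stake, total_vvv)


DAILY_CAPACITY = 1_000_000  # hypothetical: inference requests per day

# Staking 1% of a 100M supply -> 1% of capacity.
print(my_capacity(1_000_000, 100_000_000, DAILY_CAPACITY))
```

Under this literal reading, newly minted VVV enlarges the denominator. A stake that never grows would therefore represent a slowly shrinking share of capacity unless it is topped up with rewards.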

With 14 million new tokens minted annually (14% of the initial supply, so 14% inflation in the first year), split between Venice and stakers based on API usage, early stakers could potentially earn more from providing AI services than they spend using them.
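A fixed emission of 14 million VVV a year equals 14% inflation only in the first year. Measured against a growing supply, the same 14 million is a smaller percentage each year. The Python sketch below works through that schedule.

The text says the new tokens are split between Venice and stakers based on API usage, but it gives no formula. `staker_fraction` is therefore a hypothetical input.

```python
INITIAL_SUPPLY = 100_000_000
ANNUAL_EMISSION = 14_000_000  # new VVV per year, per the text


def supply_at_start_of_year(year: int) -> int:
    """Total VVV at the start of `year` (year 1 = launch year)."""
    return INITIAL_SUPPLY + ANNUAL_EMISSION * (year - 1)


def inflation_rate(year: int) -> float:
    """That year's emission as a fraction of the supply at the start of the year."""
    return ANNUAL_EMISSION / supply_at_start_of_year(year)


def split_emission(staker_fraction: float) -> tuple:
    """Split one year's emission; staker_fraction is hypothetical (formula not given)."""
    if not 0.0 <= staker_fraction <= 1.0:
        raise ValueError("staker_fraction must be between 0 and 1")
    to_stakers = ANNUAL_EMISSION * staker_fraction
    return to_stakers, ANNUAL_EMISSION - to_stakers


for year in range(1, 4):
    print(year, f"{inflation_rate(year):.2%}")
```

That gives 14% in year one, about 12.3% in year two and about 10.9% in year three.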

It's the kind of tokenomics that makes sense on paper. But as the insider trading scandal showed, even the best designs can be undermined by human nature.

Token Dispatch View 🔍

The battle for AI's future won't be fought in research labs or corporate boardrooms – it'll happen in the messy intersection of privacy, profit, and human nature. Venice AI's rollout captures this perfectly.

In one corner, we have a genuinely innovative attempt to democratise AI access through blockchain technology. In the other, we have the age-old story of insiders trying to game the system.

A bigger picture is emerging. This isn't just about another token or even another AI platform – it's about the first real battle between centralised and decentralised AI.

The timing feels almost scripted. Just as DeepSeek restricts global access and concerns about AI censorship reach fever pitch, Venice offers an escape hatch.

It's an escape hatch that raises uncomfortable questions.

First, there's the privacy paradox.

While Venice promises not to store user data, critics point out that we're largely taking their word for it. Without knowing which GPU providers are processing our requests, how can users be sure their sensitive information isn't being preserved somewhere?

Then there's the censorship question.

Venice's "abliterated" models might offer unrestricted responses, but they also open a Pandora's box of ethical concerns. If you remove all guardrails from AI, who's responsible when things go wrong?

The most intriguing aspect is what Venice represents for crypto's evolution. After years of DeFi protocols essentially copying traditional finance, here's something genuinely new: decentralised infrastructure for AI computation.

Looking ahead, Venice's success or failure could determine whether AI remains the domain of tech giants or becomes something more democratic. Erik Voorhees is betting on the latter, just as he did with crypto exchanges a decade ago.

But as the insider trading scandal reminds us, the road to decentralisation is rarely straight. For every step forward in technology, human nature seems to pull us two steps back.

Week That Was 📆

Saturday: Who Opened the ETF Floodgates? 🌊

Thursday: Mapping Crypto's Global Gold Rush 🌐

Wednesday: Is XRP Crashing BTC's Stockpile Party? 🎈

Tuesday: The DeepSeek Moment 🐳

Token Dispatch is a daily crypto newsletter handpicked and crafted with love by human bots. You can find all about us here 🙌

If you want to reach the Token Dispatch's community of 200,000+ subscribers, you can explore partnership opportunities with us.

Disclaimer: This newsletter contains sponsored content and affiliate links. All sponsored content is clearly marked. Opinions expressed by sponsors or in sponsored content are their own and do not necessarily reflect the views of this newsletter or its authors. We may receive compensation from featured products/services. Content is for informational purposes only, not financial advice. Trading crypto involves substantial risk - your capital is at risk. Do your own research.
