DePAI: Why Robots + Crypto Actually Makes Sense

Physical AI is coming, and decentralisation might be the only way it scales…

Hello,

Lately, I’ve been thinking about how strange it is that AI can write poetry, pass law exams, and plan a wedding itinerary, but the moment it tries to do something in the physical world, like pick up a box or deliver a parcel, everything falls apart. It’s like we built these incredibly smart minds and then forgot to give them a body, an address, a wallet, or even a way to not bump into a wall.

Every YouTube demo I see has the same energy: a robot doing something impressive, followed by an engineer standing just out of frame, praying it doesn’t glitch on camera. For a technology that’s supposed to “change everything,” it still feels like it lives in a world that isn’t quite ready for it.

What I’ve realised is that robots don’t struggle because they lack intelligence; they struggle because the world around them has no infrastructure built for them. There’s no reliable way to identify things, no shared map they can all trust, no consistent data layer, and no way to move value or settle tasks without a human acting as the middleman. We built clever machines and then dropped them into a messy physical world with zero instructions.

And this is where crypto starts to make sense, not in the “put a token on it” way, but in the way blockchains already coordinate autonomously through smart contracts, verify data, and move value without anyone having to trust anyone else. Physical AI needs exactly that. The models are smart and the hardware is improving, but the real world still lacks a shared coordination layer. Look closely and you realise every meaningful breakthrough in Physical AI depends on infrastructure we haven’t built yet.

Before we talk about what DePAI is, it helps to look at what’s actually missing in the physical world today, why decentralisation fits into it so naturally, and what changes once machines can finally operate without us standing behind them.


Here’s what’s really broken in the real world

When you look closely at how robots operate outside controlled demos, you quickly notice that the physical world doesn’t provide the basic infrastructure autonomous systems need. Not because anyone deliberately designed it that way, but because the world was built around human abilities and assumptions.

These gaps become obvious when you look at what a robot actually needs to function.

1. High-precision positioning: Robots depend on centimeter-level accuracy to navigate safely. Standard GPS is only accurate to a few metres outdoors and largely unusable indoors. Most factories, warehouses, farms, and ports don’t have uniform positioning systems, which forces companies to build expensive mapping setups before a robot can perform even simple tasks.

2. A common language for machines: One robot’s “box on shelf B” is another robot’s “object_142.png.” Every manufacturer uses different sensors, labels, formats, and verification methods. There’s no universal machine identity or common truth layer, so robots can’t safely coordinate or even confirm that they’re talking about the same thing.

3. Compute needs to be close, not far: Robots need millisecond-level inference to avoid collisions, adapt to moving objects, and make quick decisions. But most compute still lives in distant cloud regions where latency spikes whenever the network gets congested. That might be fine for websites or software applications, but it’s unacceptable for machines operating around humans or heavy machinery.

4. No native system for task settlement: Physical tasks involve verification (“did you actually pick this item?”, “did you deliver this part?”), and today that verification still requires a human. Even digital agents only recently started solving payment and coordination, with standards like x402, a machine-payments standard that lets AI agents pay for APIs and services on their own, without a human approving each transaction. In physical environments, there is still no mechanism for machines to assign tasks, validate outcomes, or settle payments independently.
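To make the “assign, validate, settle” loop concrete, here is a minimal sketch of a task escrow in Python. It is a hypothetical, single-process toy, not x402 and not any real smart contract: the `TaskEscrow` class, its method names, and the `proof_ok` flag are all assumptions, with `proof_ok` standing in for whatever verification a real network would use.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Dict, Optional


class TaskState(Enum):
    OPEN = auto()
    ASSIGNED = auto()
    SETTLED = auto()
    REFUNDED = auto()


@dataclass
class Task:
    requester: str
    reward: int                      # hypothetical payment units held in escrow
    state: TaskState = TaskState.OPEN
    worker: Optional[str] = None


class TaskEscrow:
    """Toy escrow (hypothetical): the reward is locked when a task is posted
    and released only after the outcome has been checked."""

    def __init__(self, balances: Dict[str, int]):
        self.balances = dict(balances)
        self.tasks: Dict[str, Task] = {}

    def post(self, task_id: str, requester: str, reward: int) -> None:
        if self.balances.get(requester, 0) < reward:
            raise ValueError("insufficient funds")
        self.balances[requester] -= reward           # lock funds up front
        self.tasks[task_id] = Task(requester, reward)

    def assign(self, task_id: str, worker: str) -> None:
        task = self.tasks[task_id]
        if task.state is not TaskState.OPEN:
            raise ValueError("task is not open")
        task.state, task.worker = TaskState.ASSIGNED, worker

    def settle(self, task_id: str, proof_ok: bool) -> None:
        # proof_ok is a placeholder for real verification, e.g. a sensor
        # attestation or a second machine confirming the delivery.
        task = self.tasks[task_id]
        if task.state is not TaskState.ASSIGNED or task.worker is None:
            raise ValueError("task is not assigned")
        if proof_ok:
            self.balances[task.worker] = self.balances.get(task.worker, 0) + task.reward
            task.state = TaskState.SETTLED
        else:
            self.balances[task.requester] += task.reward  # refund the requester
            task.state = TaskState.REFUNDED


escrow = TaskEscrow({"warehouse": 100})
escrow.post("pick-42", "warehouse", 10)
escrow.assign("pick-42", "robot-7")
escrow.settle("pick-42", proof_ok=True)
print(escrow.balances)  # {'warehouse': 90, 'robot-7': 10}
```

Locking the reward when the task is posted is the design choice that matters here: the robot doing the work doesn’t have to trust the requester to pay later, which is roughly the guarantee a smart contract would enforce on-chain.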

Put together, these issues show that the physical world doesn’t yet have the shared primitives autonomous systems need to operate without human supervision. And once you recognise that, a bigger question appears: who is supposed to build those missing layers? That’s where the idea of DePAI comes in.

What the heck is DePAI?

DePAI is essentially an attempt to build the coordination and infrastructure layer that real-world robots lack today. If I had to explain DePAI to someone who has never touched AI or crypto in their life, I’d say this: if you want machines to operate in the real world, they need a common set of rails (a shared identity system, location access, cheap compute nearby, and a way to pay for things without asking a human for permission every few minutes).

And instead of one company owning all those rails, DePAI, or Decentralised Physical AI, simply asks: why not make this infrastructure open, so anyone can build on it and machines can actually talk to each other?

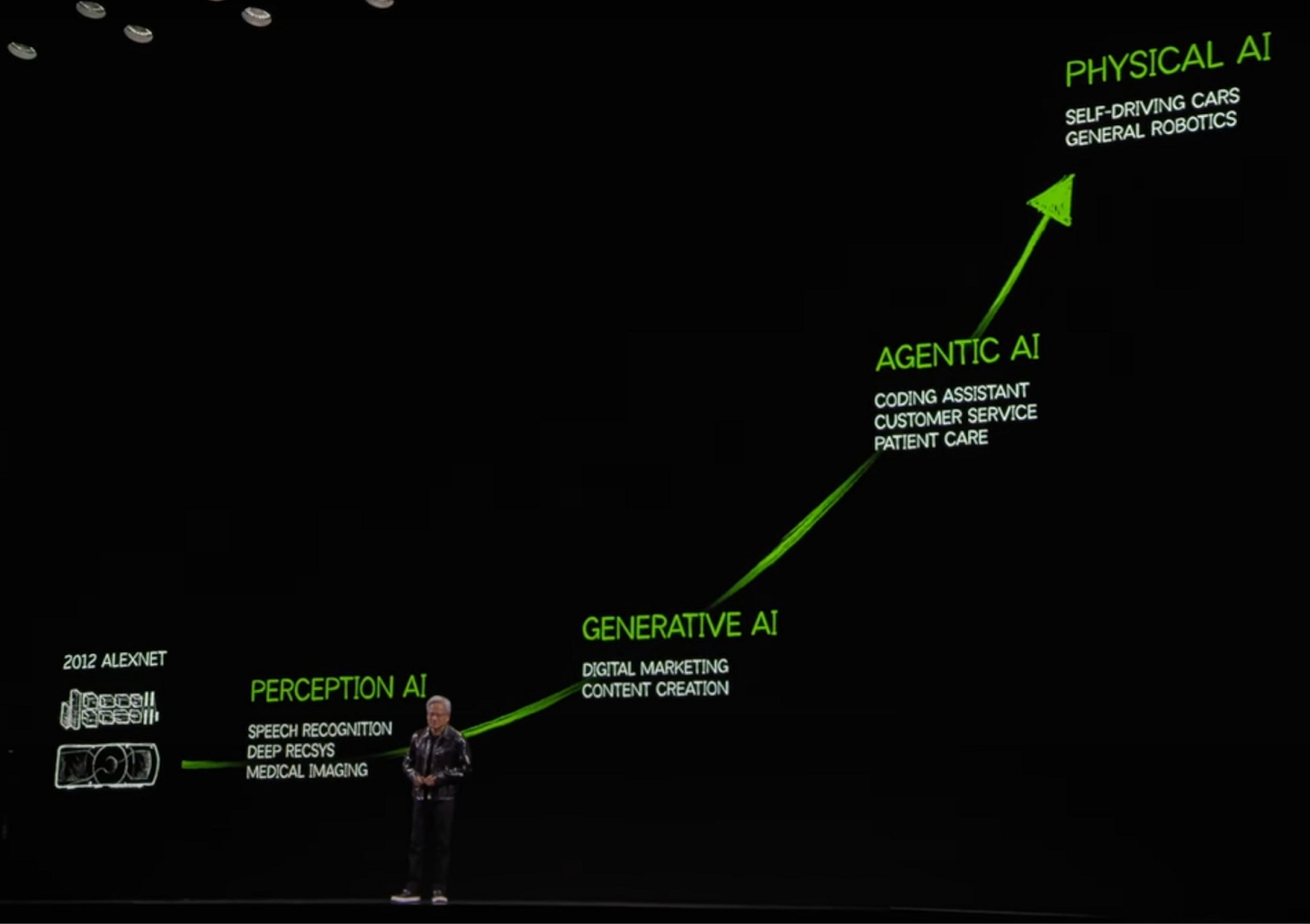

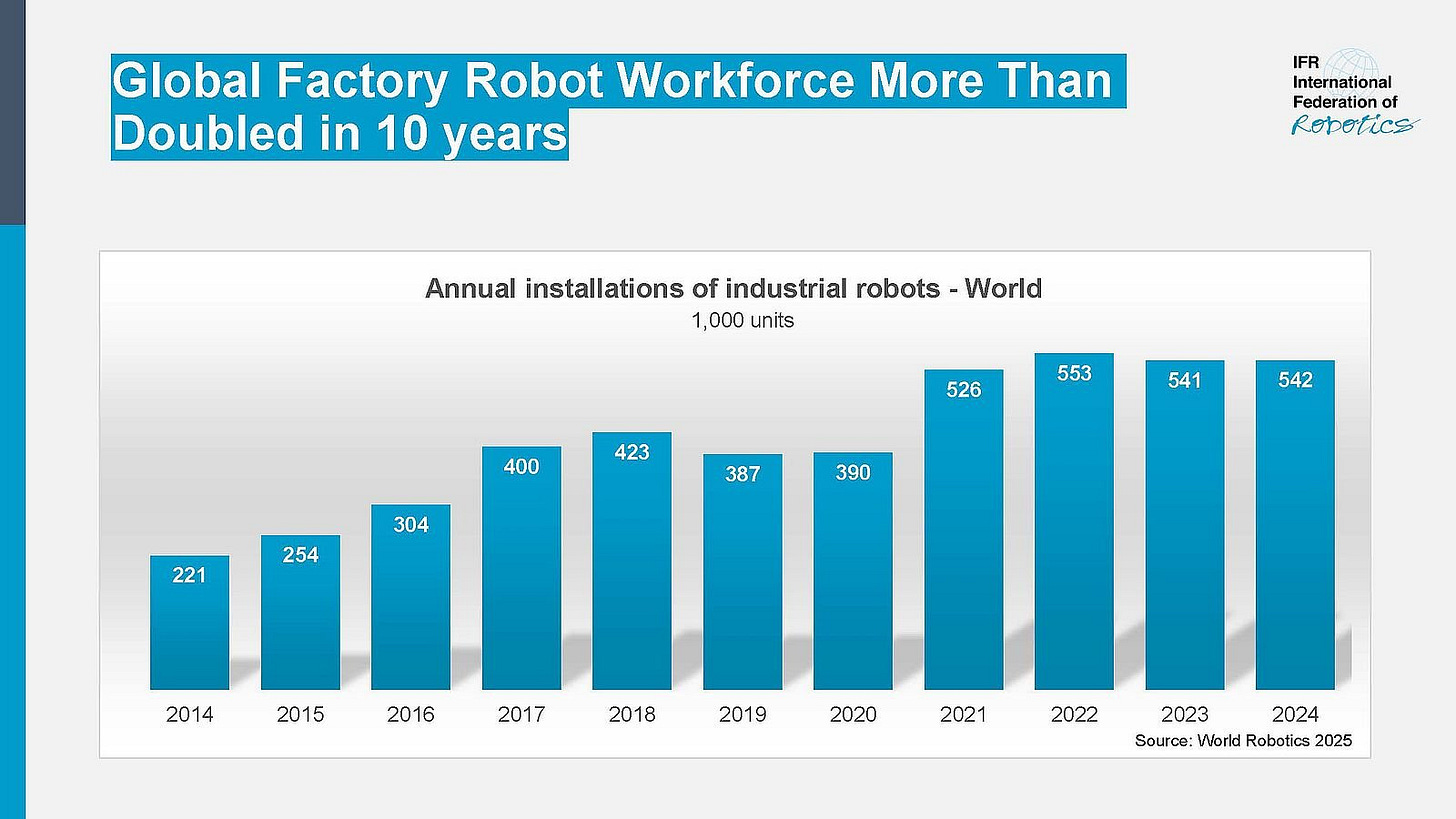

People are suddenly using the term because demand for physical AI has accelerated sharply.

Robotics company Figure AI has raised $675 million to build general-purpose humanoids for warehouses. Another company, Apptronik, secured $350 million to deploy bipedal robots across manufacturing floors. NVIDIA’s founder, Jensen Huang, recently said, “The ChatGPT moment in the field of general robots is coming,” with demand driven by industries that can’t hire workers fast enough to keep up.

At the same time, the software side is evolving in the same direction: AI agents are beginning to behave like economic participants. We covered this trend in detail in our recent x402 piece, where AI agents pay for APIs and services on their own without a human approving each transaction. It sounds minor, but it marks the first time software has needed a native payment rail, the same way robots will soon need one in the physical world.

This is where everything starts to converge. The robots are becoming capable. The agents are becoming autonomous. But even with all this progress, the underlying world these systems rely on still isn’t built for them.

A robot can’t rely on Google’s location data once it steps inside a warehouse. It can’t depend on Amazon’s cloud if it needs real-time compute one meter away. It can’t swipe a Visa card to pay for a service. And it can’t use a proprietary map that gets updated differently by each manufacturer. Every company solves one slice of the puzzle, but none of them solve the full stack the machines actually need.

This makes it clear that the problem isn’t any single product; it’s the architecture. The stack is too spread out for one company to own end-to-end, which is why a different model is needed.

Why decentralisation fits better

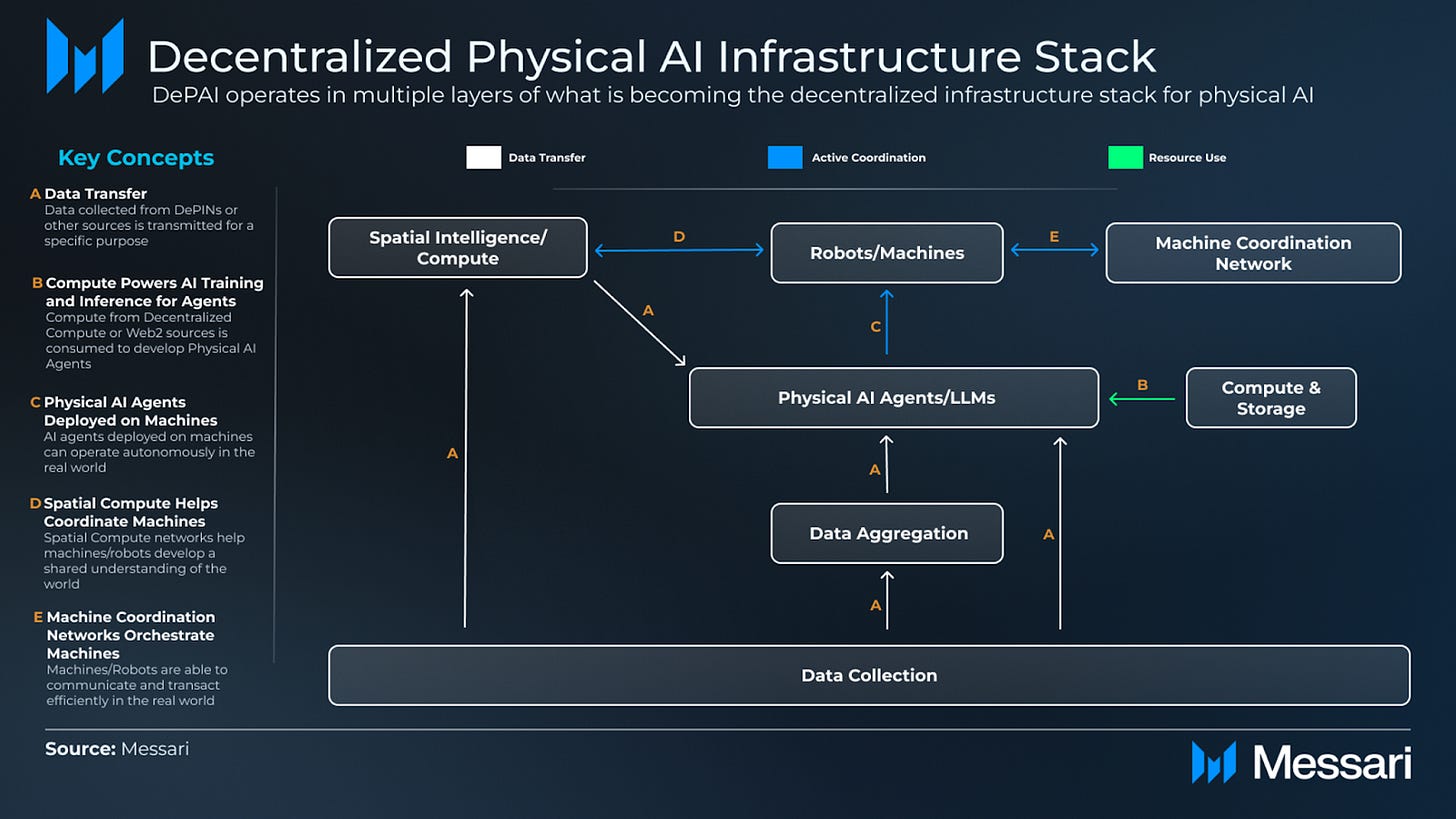

If you study the DePAI infrastructure stack, the first thing you notice is how much has to happen for a robot to function in the real world. Data doesn’t move in a straight line; it circulates between machines, sensors, spatial compute, aggregation layers, and coordination networks. Every one of these layers depends on the others, and none of them live in environments controlled by a single company, which is why decentralisation fits more naturally here. You can see this clearly when you break down the different layers robots depend on.

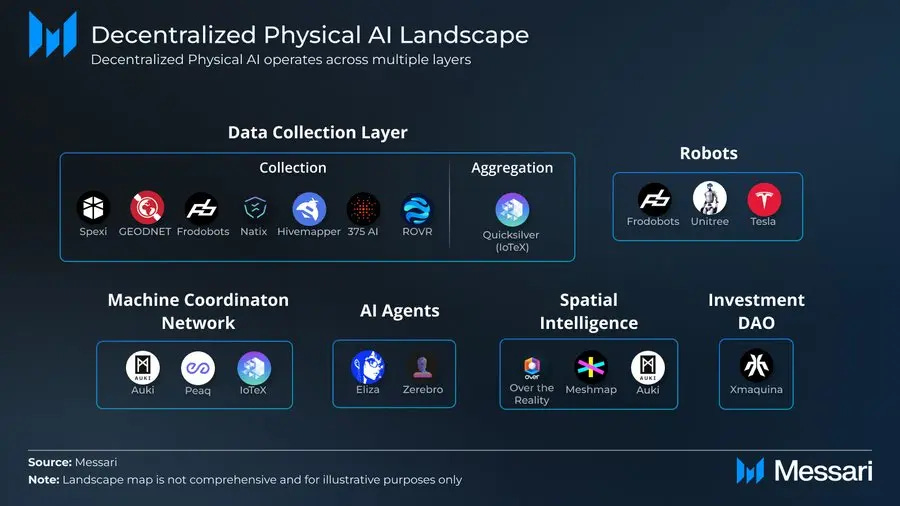

Here’s how the Decentralised Physical AI Infrastructure operates:

The bottom of the stack is the data collection layer. This is where robots gather video, sensor readings, teleoperation demonstrations, and real-world edge cases. Think of a delivery robot trying to operate on a busy street with uneven pavements, reflective surfaces, unexpected obstacles, strollers, and wet patches: all of this becomes training data. If this layer doesn’t capture enough environmental diversity across roads, shops, farms, warehouses, and ports, the models trained above it will always be narrow. No single company has access to the full variety of real-world conditions Physical AI needs.

Above that is spatial intelligence and compute. This is the robot’s moment-to-moment understanding of the world: where objects are, how they’re moving, what’s safe, and what’s not. Imagine a warehouse robot interpreting its surroundings. It needs compute close by, not in a data center thousands of kilometers away, because even a short delay can cause it to misjudge distance or speed. One cloud provider can’t realistically deploy low-latency compute nodes next to every factory, store, farm, and logistics hub. The physical world forces this layer to be distributed.
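A quick back-of-the-envelope calculation shows why distance matters. The sketch below computes how far a robot moves while it waits for a remotely computed decision; the 2 m/s speed and the three round-trip latencies are illustrative assumptions, not measurements from any real deployment.

```python
def blind_distance_m(speed_m_s: float, round_trip_ms: float) -> float:
    """Metres a robot travels before a remotely computed decision comes back."""
    return speed_m_s * round_trip_ms / 1000.0


SPEED_M_S = 2.0  # assumed speed of a warehouse robot

# Illustrative round-trip latencies (assumptions, not benchmarks).
for label, round_trip_ms in [
    ("on-site edge node", 5),
    ("regional cloud", 40),
    ("congested distant cloud", 300),
]:
    cm = blind_distance_m(SPEED_M_S, round_trip_ms) * 100
    print(f"{label}: {cm:.0f} cm travelled before the answer arrives")
```

Under these assumptions the robot moves about 1 cm, 8 cm, and 60 cm respectively before it can react, and that gap can be the difference between a controlled stop and a collision. That is the pressure pushing this layer towards compute placed on or near the site.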

Above it sits machine coordination, the layer that lets robots share information, confirm each other’s actions, and collaborate on tasks. In a warehouse, one robot might be picking items while another is navigating aisles. Today, robots from different manufacturers can’t coordinate because they don’t speak the same identity or verification language. A robot made by company A won’t trust a map update from company B. Without a shared coordination layer, multi-robot environments become siloed and inefficient.
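Here is a minimal sketch of what a shared identity and verification layer does in the company A / company B example: every map update carries the sender’s registered identity and an authentication tag, and any robot can reject updates that have been tampered with or that come from unknown machines. The registry, machine IDs, keys, and function names are hypothetical. The sketch uses HMAC from Python’s standard library only so it runs without dependencies; a real decentralised network would register public keys and use asymmetric signatures (for example Ed25519), so that verifiers never hold the signing secret.

```python
import hashlib
import hmac
import json

# Hypothetical shared registry mapping machine identities to keys.
# Stand-in only: a real network would store public keys and verify
# asymmetric signatures instead of sharing HMAC secrets.
REGISTRY = {
    "companyA:mapper-1": b"demo-key-a",
    "companyB:picker-3": b"demo-key-b",
}


def canonical(update: dict) -> bytes:
    # Sorted keys and fixed separators so every machine hashes identical bytes.
    return json.dumps(update, sort_keys=True, separators=(",", ":")).encode()


def attest_update(machine_id: str, update: dict) -> dict:
    tag = hmac.new(REGISTRY[machine_id], canonical(update), hashlib.sha256).hexdigest()
    return {"machine_id": machine_id, "update": update, "tag": tag}


def verify_update(msg: dict) -> bool:
    key = REGISTRY.get(msg["machine_id"])
    if key is None:
        return False  # unknown identity: reject
    expected = hmac.new(key, canonical(msg["update"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, msg["tag"])


msg = attest_update("companyA:mapper-1", {"aisle": 4, "status": "blocked"})
tampered = {**msg, "update": {"aisle": 4, "status": "clear"}}
print(verify_update(msg), verify_update(tampered))  # True False
```

The important part is the shared registry: once both manufacturers’ robots look up identities in the same place, company B’s robot can check company A’s update instead of ignoring it.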

When you zoom out from these layers, the market map falls into place. Each part of the map represents a different job in the Physical AI stack, and those jobs sit in completely different environments, handled by different companies.

And you can already see pieces of this stack taking shape in real deployments.

What is actually being built today?

Early systems are starting to show up in real-world use, especially in how machines gather data, understand space, and coordinate:

1. Real-world data collection: Frodobots is deploying low-cost delivery robots that capture human driving behavior, edge-case obstacles, and local conditions while operating in different regions. This gives Physical AI access to real-environment data that simulations and controlled labs don’t capture.

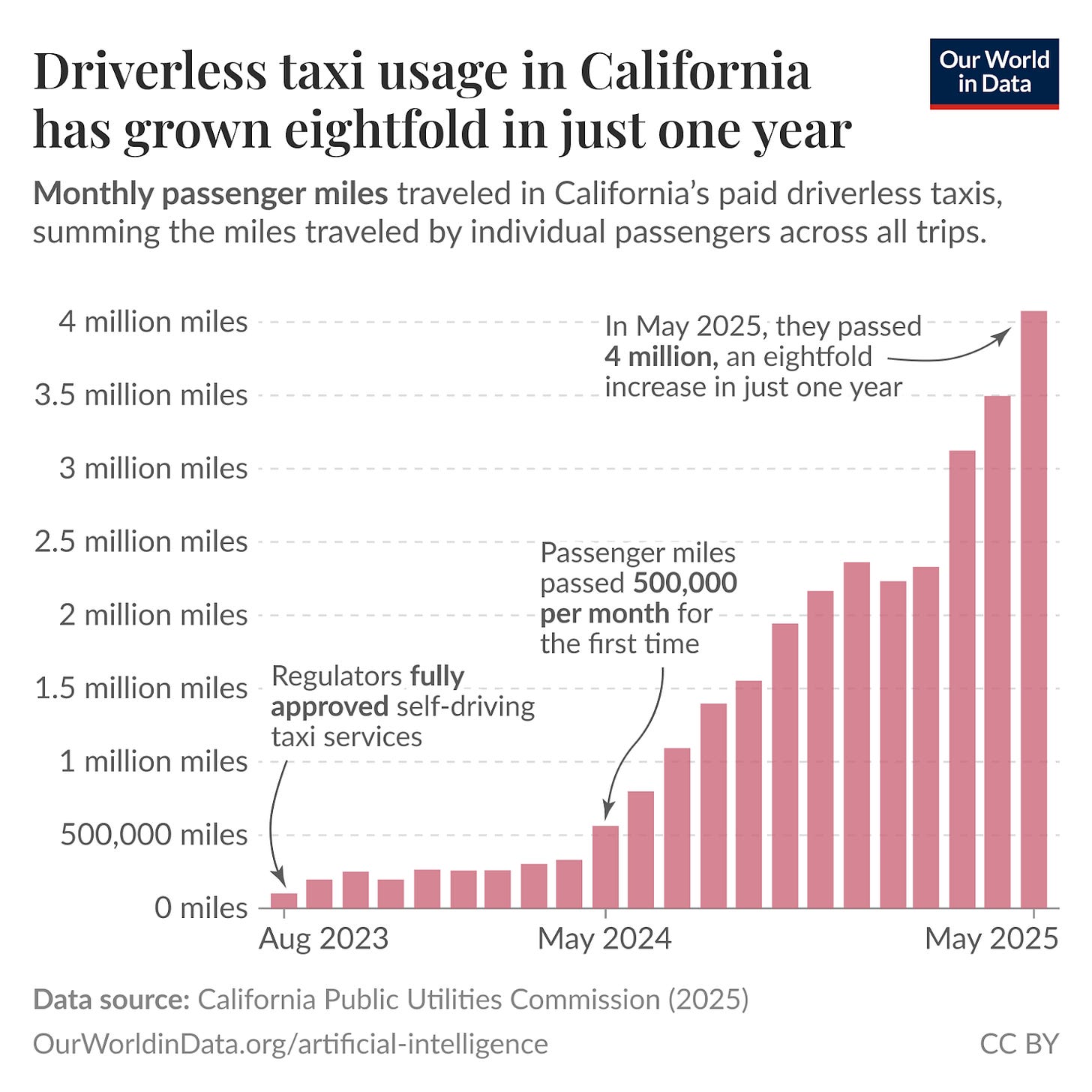

You can see something similar at a larger scale too. Driverless taxi usage in California grew eightfold in one year and crossed 4 million passenger miles this year, a reminder that autonomy scales fastest when systems learn from real environments instead of simulations.

2. Continuous video mapping: Hivemapper’s dashcams and NATIX’s phone-camera network gather up-to-date street-level video from thousands of contributors. This distributed coverage builds broad perception datasets that would be too expensive for a single robotics company to maintain.

3. SAM Physical AI agents: SAM is an early example of a robot that uses fleet-level visual data to understand location and context while acting autonomously. It’s still early, but it demonstrates how shared data improves machine capability.

4. Verified & structured datasets: Frameworks like IoTeX Quicksilver help turn raw footage and sensor readings into validated, uniform datasets. This matters because individual clips aren’t useful unless they’re standardised and checked before training.
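The validation step described above can be sketched in a few lines. This is not IoTeX Quicksilver’s API; it is a hypothetical pipeline that assumes raw range-sensor records arrive as dictionaries with `device`, `ts`, `range`, and optional `unit` fields. It drops malformed or implausible records, converts everything to metres, removes duplicate uploads, and sorts by time so the output is uniform enough to train on.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional

UNIT_TO_M = {"m": 1.0, "cm": 0.01, "mm": 0.001}
MAX_RANGE_M = 100.0  # assumed plausible maximum for this hypothetical sensor


@dataclass(frozen=True)
class RangeReading:
    device_id: str
    timestamp_s: float
    range_m: float


def normalise(raw: dict) -> Optional[RangeReading]:
    """Convert one raw contributor record into the uniform schema, or reject it."""
    try:
        device = str(raw["device"])
        ts = float(raw["ts"])
        value = float(raw["range"])
    except (KeyError, TypeError, ValueError):
        return None  # missing or unparseable field
    scale = UNIT_TO_M.get(raw.get("unit", "m"))
    if scale is None:
        return None  # unknown unit
    metres = value * scale
    if not 0.0 <= metres <= MAX_RANGE_M:
        return None  # physically implausible (also rejects NaN)
    return RangeReading(device, ts, round(metres, 3))


def build_dataset(records: Iterable[dict]) -> List[RangeReading]:
    seen = set()
    clean: List[RangeReading] = []
    for raw in records:
        reading = normalise(raw)
        if reading is None:
            continue
        key = (reading.device_id, reading.timestamp_s)
        if key in seen:
            continue  # duplicate upload of the same reading
        seen.add(key)
        clean.append(reading)
    return sorted(clean, key=lambda r: r.timestamp_s)


raw_records = [
    {"device": "bot-1", "ts": 2, "range": 1500, "unit": "mm"},
    {"device": "bot-1", "ts": 1, "range": 2.5},
    {"device": "bot-1", "ts": 1, "range": 2.5},              # duplicate
    {"device": "bot-2", "ts": 3, "range": "n/a"},            # unparseable
    {"device": "bot-2", "ts": 4, "range": -1},               # implausible
    {"device": "bot-3", "ts": 5, "range": 3, "unit": "ft"},  # unknown unit
]
print(build_dataset(raw_records))
```

Only the two valid `bot-1` readings survive, both expressed in metres and ordered by timestamp, which is the kind of uniform output the paragraph above describes.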

All of these address different parts of the stack (data, mapping, aggregation, coordination), and together they give a real glimpse of what a functioning DePAI layer could look like.

My take, and where this actually goes

What stands out to me after digging through all of this is how the first real progress in Physical AI is likely to come from things that look basic on the surface: better data pipelines, better spatial understanding, and more reliable ways for machines to operate without constant supervision. These are the pieces that make everything else possible, and they’re the parts that are actually moving today.

I also don’t think this is a problem any single company can solve end-to-end. The environments robots operate in are too varied, and the stack has too many moving parts. Different teams will keep building different pieces, from hardware and data networks to mapping systems and compute layers, and those pieces will only be useful if they work together instead of sitting in separate silos.

This shift won’t be quick or smooth. Real-world environments are messy, safety is non-negotiable, and deployment is still very expensive. But even with all that, the direction feels clear enough now: machines that rely on shared systems for data and coordination become more predictable and much easier to integrate into real environments.

The test now is whether those underlying layers settle into something stable enough for machines to depend on. If they do, Physical AI can move from on-stage demos to everyday use. If they don’t, everything stays fragmented and experimental.

That’s it for the day. See you next Sunday.

Until then … stay curious and DYOR,

Vaidik

Token Dispatch is a daily crypto newsletter handpicked and crafted with love by human bots. If you want to reach the Token Dispatch’s community of 200,000+ subscribers, explore partnership opportunities with us 🙌

📩 Fill out this form to submit your details and book a meeting with us directly.

Disclaimer: This newsletter contains analysis and opinions of the author. Content is for informational purposes only, not financial advice. Trading crypto involves substantial risk - your capital is at risk. Do your own research.