Humans Verified, Bots Anonymous 🤖

The agent economy can't scale without identity infrastructure. Zero-knowledge proofs are the only way forward.

For most of human history, identity was simple. You were whoever the people around you said you were. Your face, your voice, and your patterns of behavior were enough. Trust was local, reputation was earned through repeated interactions, and anonymity required genuine effort.

Then we built the internet, and identity became a problem we had to solve with infrastructure.

Now we’re building autonomous agents, software that acts without us, and we’ve discovered that the internet’s entire trust model was designed for a world where software didn’t make decisions. It was built for dumb pipes carrying messages between humans, not for non-deterministic code that negotiates, transacts, and delegates authority at machine speed.

So we’re stuck with a philosophical inversion that would be funny if it weren’t so dangerous. To participate in digital life, humans now surrender their most immutable biological markers (iris patterns, facial geometry, voice prints) just to prove they’re not bots. Meanwhile, the bots themselves reveal nothing. No credentials, no audit trails, no proof of who owns them or what they’re allowed to do. They glide through the infrastructure like ghosts, accumulating permissions and moving money, and the best we can do is hope they don’t hallucinate and delete a production database.

We’ve built the infrastructure backwards, and now we’re discovering that everything we want to do with AI agents is impossible until we fix it.

Somewhere right now, someone is holding their face very still in front of a glowing orb, scanning their iris into a database run by a company co-founded by Sam Altman. They’re submitting one of the most permanent pieces of biometric data a human possesses, all to prove they’re human. In a data center elsewhere, an AI agent is executing a $50,000 trade, and nobody has any idea who made it, who controls it, or whether it’s even allowed to do that.

The asymmetry reveals that we’ve entered a phase transition in how trust works, and our old infrastructure can’t handle it. The internet was built for two kinds of entities: biological humans (who sleep, forget passwords, and bear legal liability) and deterministic machines (which execute predictable code under strict administrative control). It was fundamentally not designed for autonomous software that makes independent decisions but needs to be held accountable like a person.

This collision between autonomous capability and obsolete identity infrastructure is getting a little crazy. Fine-tuned AI models are 22 times more likely to produce harmful outputs than base models. Jailbreak attacks succeed 90 to 100 percent of the time on production systems. When they do, 90 percent lead to sensitive data leaks. It takes an attacker about 42 seconds to jailbreak an AI agent, sometimes as little as four.

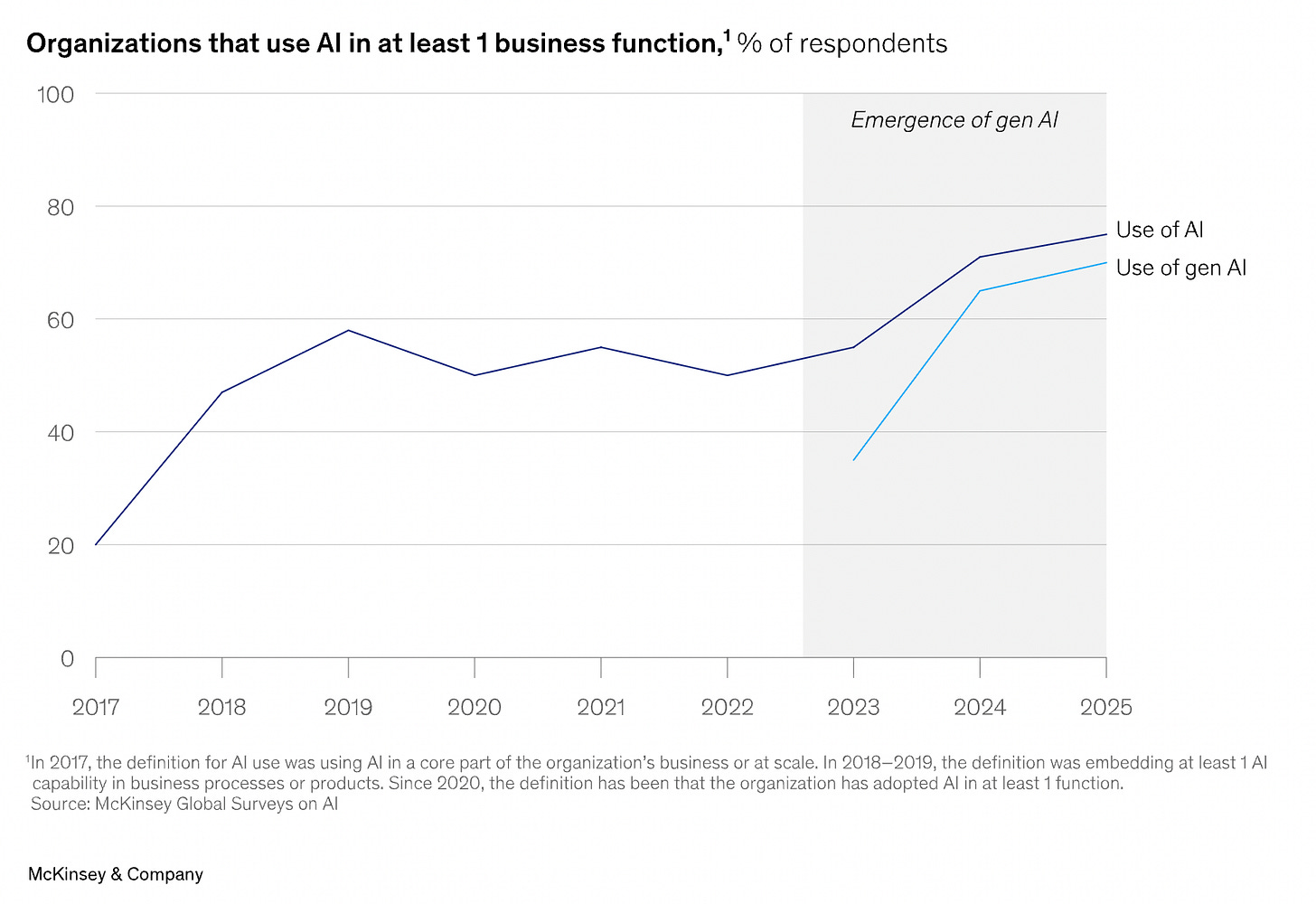

And yet enterprises keep trying to deploy these things. They want autonomous agents booking travel, negotiating contracts, executing trades. Analysts project the agent economy could add over $3 trillion in annual value globally, with AI contributing up to 7 percent of GDP growth by 2030. But 95 percent of AI pilots fail to reach production, and a lot of that failure comes down to a simple problem.

Nobody trusts these things enough to let them touch real systems.

The trust problem is about identity. When an agent interacts with your bank, your cloud infrastructure, or your customer database, you need to know: Who controls this? What is it allowed to do? What happens if it breaks something?

But what does “identity” even mean for an AI agent? We’re not trying to identify the software itself. Code doesn’t bear legal responsibility. What we actually need to verify is the chain of accountability. Which human or organization deployed this agent, what authority did they grant it, and what constraints govern its behavior? An agent’s identity is the answer to three questions: who it represents, what it’s permitted to do, and who’s liable when it breaks something. The agent is just code.

Current systems have no good answers. AI agents get treated like “service accounts” with static API keys that live forever in environment variables. If someone steals the key, they can impersonate the agent indefinitely. There’s no session timeout, no rotation, no concept of “this credential should expire.” It’s the digital equivalent of handing a summer intern the CEO’s master key and unlimited corporate credit card, except the intern is a black box that occasionally hallucinates.
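
To make the contrast with a forever-valid API key concrete, here is a minimal sketch of a credential that names its scopes and expires. The names (`SIGNING_KEY`, `issue_token`, `check_token`) and the token layout are assumptions made up for illustration, not any vendor's API, and a real deployment would use an established token format and managed keys.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical demo secret; a real system would keep this in a KMS or HSM.
SIGNING_KEY = b"demo-only-secret"


def issue_token(agent_id: str, scopes: list, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Return a signed token naming the agent, its scopes, and an expiry time."""
    issued_at = time.time() if now is None else now
    payload = {"agent": agent_id, "scopes": scopes, "exp": issued_at + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(payload, sort_keys=True).encode())
    tag = hmac.new(SIGNING_KEY, body, hashlib.sha256).hexdigest()
    return body.decode() + "." + tag


def check_token(token: str, required_scope: str,
                now: Optional[float] = None) -> bool:
    """Accept only if the signature is valid, the token is unexpired, and the scope was granted."""
    current = time.time() if now is None else now
    try:
        body, tag = token.split(".")
    except ValueError:
        return False
    expected = hmac.new(SIGNING_KEY, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(tag, expected):
        return False
    payload = json.loads(base64.urlsafe_b64decode(body))
    return current < payload["exp"] and required_scope in payload["scopes"]
```

A stolen token of this kind is only useful until `exp` passes and only for the scopes it lists, which is the property static keys in environment variables lack.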

This is what security researchers call “excessive agency.” The agent needs access to everything it might need for unknown future tasks, so developers just grant broad permissions. Read/write on all repositories. Full Salesforce access. The works. Then the agent gets prompt-injected (someone tricks it into ignoring its instructions), and suddenly an attacker has god-mode access that looks completely legitimate because it is authenticated and authorised.

Traditional firewalls and access controls are architecturally blind to this. They were built for humans who log out, not autonomous software that runs forever. The traditional payment system, which is built around a human cardholder, a merchant, and their respective banks, assumes a person initiates every transaction. When AI agents start paying each other autonomously, the model collapses. Who’s the cardholder if software is spending money? How do you verify authorization without a human present? We have no way to verify the chain of authority when Agent A delegates to Agent B, which spins up Agent C to complete a task.

The human side of identity is also a disaster, just in the opposite direction. We’ve built a surveillance infrastructure that fails on every dimension. The average data breach now costs $4.44 million globally, $10.22 million in the US. India’s Aadhaar system, which holds biometric data for over a billion people, got breached in 2023. The leak included 815 million records with pregnancy status, religion, caste, bank details. In 2024, hackers threatened to leak the World-Check database, the KYC screening system used globally by banks. Even the verification infrastructure itself is compromised.

Iris patterns and fingerprints are permanent. Once they leak, they’re gone forever, and they can be weaponised by AI systems to convincingly spoof real people, defeating the very verification mechanisms designed to keep bots out. The more invasive the verification becomes, the greater the catastrophic potential when that data is compromised.

We’re heading toward a two-tier internet. One layer for verified humans and their verified agents, where transactions are smooth and trust is high. Another layer for everyone else, where aggressive rate limiting and CAPTCHAs make basic tasks miserable. The World ID model suggests we’re already there. Prove you’re human with your eyeballs, or get treated like a bot.

Zero-knowledge proofs offer a way out of this mess

The basic idea is that you can prove a fact without revealing the underlying data. A user can prove they’re over 21 without revealing their birthdate. An AI agent can prove it passed security audits without revealing vulnerabilities or intellectual property. A financial institution can verify a customer meets KYC requirements without storing all their personal information in a database that will eventually get breached.
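
For a feel of what "prove a fact without revealing the data" looks like in code, here is a toy sketch of one classic zero-knowledge proof, the Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir trick. It proves "I know the secret behind this public key" rather than an age or KYC claim, which need heavier machinery. The group parameters are deliberately tiny and the function names are illustrative assumptions; none of this is production cryptography.

```python
import hashlib
import secrets

# Toy group, far too small for real use: P = 2*Q + 1 with P and Q prime,
# and G = 4 generates the subgroup of order Q.
P, Q, G = 2039, 1019, 4


def challenge(public_key: int, commitment: int) -> int:
    """Fiat-Shamir: derive the challenge by hashing the public values."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret: int) -> tuple:
    """Prove knowledge of `secret` behind G**secret mod P without sending `secret`."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q)          # fresh randomness hides the secret
    commitment = pow(G, nonce, P)
    response = (nonce + challenge(public_key, commitment) * secret) % Q
    return commitment, response


def verify(public_key: int, proof: tuple) -> bool:
    """Check G**response == commitment * public_key**challenge (mod P)."""
    commitment, response = proof
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P
```

The verifier only ever sees the public key and the pair `(commitment, response)`. The secret itself never leaves the prover.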

The technology works. Privado ID lets you prove you’re an accredited investor without revealing your actual net worth. zkMe’s system allows agents to prove compliance with Anti-Money Laundering rules without uploading transaction logs. Prove’s Verified Agent creates a cryptographic chain of custody for agentic commerce, potentially covering $1.7 trillion in transactions. Cheqd builds Trust Registries and payment rails so credential issuers get paid when agents verify themselves.

The verification happens cryptographically, in under 50 milliseconds, fast enough for real-time agent interactions.

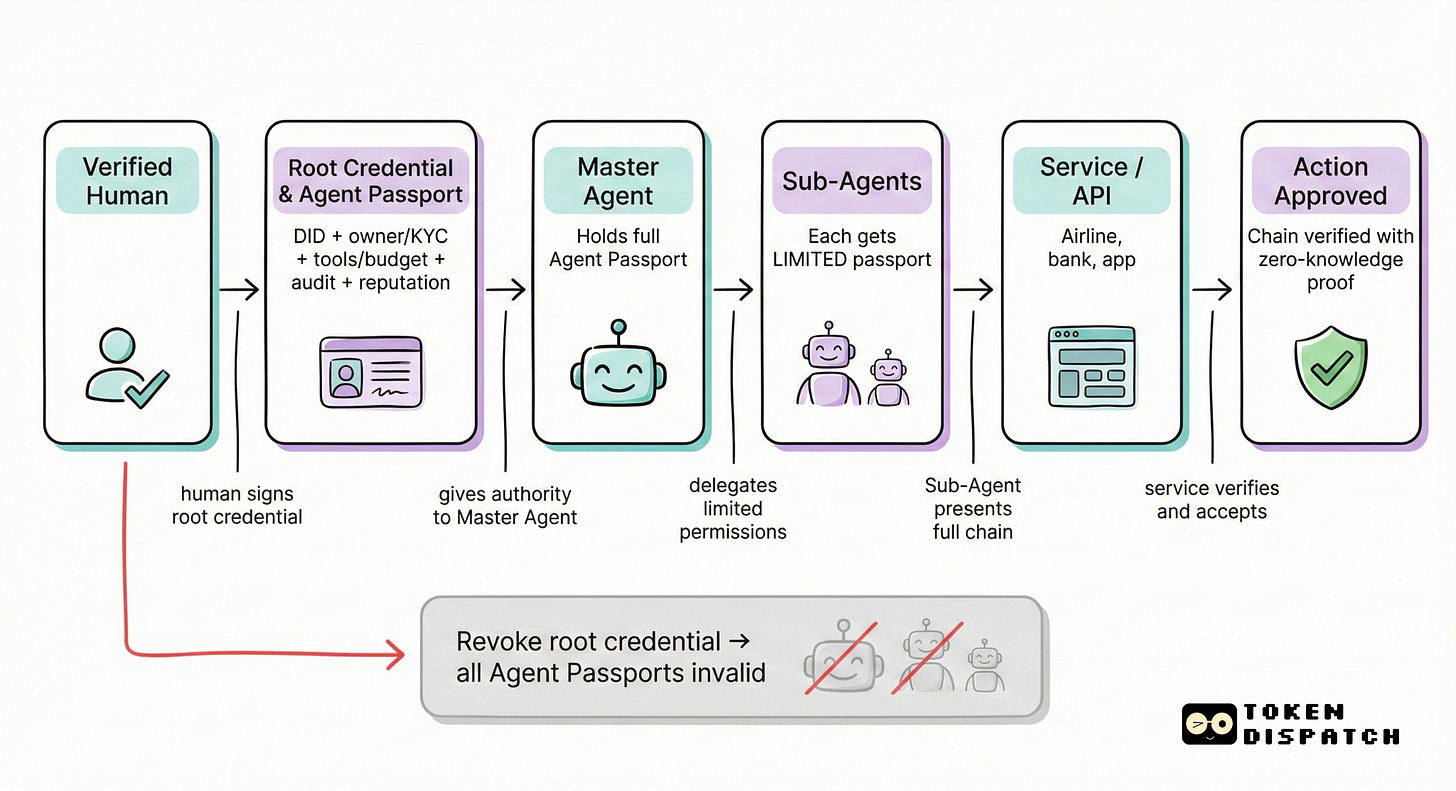

For AI agents specifically, zero-knowledge proofs unlock something called “Agent Passports”: a cryptographic bundle that travels with the agent, proving things about it without revealing everything.

The passport includes a Decentralised Identifier, a unique ID anchored on a blockchain that separates the agent’s identity from any single service provider. No more “this agent exists because OpenAI says so.” The agent has its own persistent identity.

Then there are Verifiable Credentials, digitally signed attestations that act like stamps in a passport. A KYC credential proving the agent is owned by a legally verified human. A capability credential proving it’s authorised to use specific tools or spend a certain budget. An audit credential proving its code passed safety checks. A reputation log showing its past actions and success rates, so other agents can assess trustworthiness before transacting.

The security innovation here is the delegation chain. A human (verified through something like World ID or government KYC) signs a credential granting limited authority to their Master Agent. The Master Agent can then spin up specialised Sub-Agents and delegate subsets of its permissions to them. When a Sub-Agent interacts with, say, an airline booking system, it presents the entire chain. The airline verifies cryptographically: “This request comes from a Sub-Agent, authorised by a Master Agent, owned by a verified human user.”

If the user revokes the root credential, the entire chain invalidates instantly. The rogue agent problem goes away because authority is always traceable back to a human root, and that root can pull the plug.
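
The delegation chain can be sketched in a few lines. This is a simplified model under loud assumptions: the names (`KEYS`, `Link`, `delegate`, `verify_chain`) are hypothetical, and HMAC with per-issuer secrets stands in for the public-key signatures a real credential system would use. It shows the three checks that matter: each link is signed by the party that received the previous one, no link grants more than its parent held, and revoking an upstream link invalidates everything below it.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass

# Hypothetical key registry; HMAC is a stand-in for real digital signatures.
KEYS = {"alice": b"k-alice", "master-agent": b"k-master", "sub-agent": b"k-sub"}
REVOKED: set = set()  # signatures of revoked credentials


@dataclass(frozen=True)
class Link:
    issuer: str
    subject: str
    permissions: frozenset
    signature: str


def _sign(issuer, subject, permissions):
    message = json.dumps([issuer, subject, sorted(permissions)]).encode()
    return hmac.new(KEYS[issuer], message, hashlib.sha256).hexdigest()


def delegate(issuer, subject, permissions):
    perms = frozenset(permissions)
    return Link(issuer, subject, perms, _sign(issuer, subject, perms))


def verify_chain(chain, trusted_root, action):
    """Walk the chain from the human root down to the acting agent."""
    if not chain or chain[0].issuer != trusted_root:
        return False
    allowed = chain[0].permissions
    expected_issuer = trusted_root
    for link in chain:
        if link.issuer != expected_issuer or link.issuer not in KEYS:
            return False  # broken chain of custody
        if link.signature in REVOKED:
            return False  # this credential, or one above it, was revoked
        expected_sig = _sign(link.issuer, link.subject, link.permissions)
        if not hmac.compare_digest(link.signature, expected_sig):
            return False  # forged or altered link
        if not link.permissions <= allowed:
            return False  # a delegate tried to grant more than it holds
        allowed, expected_issuer = link.permissions, link.subject
    return action in allowed
```

In this sketch, adding the root link's signature to `REVOKED` makes every chain that starts from it fail verification, which mirrors the "pull the plug" behavior described above.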

This is how you solve the Amazon versus Perplexity dispute. In late 2025, Amazon issued a cease-and-desist to Perplexity AI over its “Comet” shopping agent, claiming it violated the Computer Fraud and Abuse Act by disguising itself as a Chrome browser and bypassing bot detection. Amazon argued this was covert scraping. Perplexity said Comet was just a user agent, a digital extension of the human user, and if a human has the right to shop on Amazon, their authorised agent should inherit that right.

There’s currently no standardised way for an agent to say, “I am a bot, but I am the user’s bot, I am authorised to spend $50, and I am here to buy, not scrape,” without giving the platform total surveillance power over the user’s credentials. Agent Passports fix this. The agent presents a zero-knowledge proof of its authorisation. Amazon can verify the proof without seeing the user’s identity, their spending history, or anything else. Trust without surveillance.

The economic stakes here are staggering.

Generative AI could add $2.6 trillion to $4.4 trillion annually to the global economy, but most of that value is locked behind trust barriers. Institutional investors won’t deploy capital into AI-driven trading strategies without robust KYC/AML compliance. Enterprises won’t let autonomous systems access critical infrastructure without verifiable identities. Regulators won’t approve AI in sensitive domains without accountability mechanisms.

Zero-knowledge identity addresses all three requirements without creating honeypots of personal data. Investors get compliance through selective disclosure that satisfies regulations without data transfer. Enterprises get cryptographic verification enabling trustless interactions between agents. Regulators get immutable audit trails that don’t expose sensitive data to public scrutiny.

The EU AI Act is already forcing this. High-risk AI systems need secure logging of decisions and human oversight mechanisms. Zero-knowledge proofs let agents cryptographically log their decision logic (I rejected this loan because the credit score was below 600) without revealing the private data (the actual credit score). This creates an audit trail that satisfies regulatory requirements without exposing user information.
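
As a rough illustration of logging a decision without logging the data behind it, the sketch below uses a simpler technique than a zero-knowledge proof: a salted hash commitment stored in a hash-chained log. The log records the rule that fired and a commitment to the private value, and an auditor who is later given the salt and value can check them against the record. The function names are hypothetical, and a real system would pair the commitment with a proof that the hidden value actually satisfies the rule.

```python
import hashlib
import json
import secrets


def commit(value: int) -> tuple:
    """Return (commitment, salt). The commitment hides `value` until the salt is shared."""
    salt = secrets.token_hex(16)
    return hashlib.sha256(f"{salt}:{value}".encode()).hexdigest(), salt


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list, rule: str, commitment: str) -> dict:
    """Append a record chained to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {"rule": rule, "commitment": commitment, "prev": prev_hash}
    entry = dict(body, hash=_digest(body))
    log.append(entry)
    return entry


def chain_is_intact(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = "0" * 64
    for entry in log:
        body = {"rule": entry["rule"], "commitment": entry["commitment"],
                "prev": entry["prev"]}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


def open_commitment(commitment: str, salt: str, value: int) -> bool:
    """Auditor-side check that a disclosed value matches the logged commitment."""
    return hashlib.sha256(f"{salt}:{value}".encode()).hexdigest() == commitment
```

The log itself contains only the rule text and opaque hashes, so it can be shared more widely than the underlying credit data.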

If you’re wondering why this isn’t already happening everywhere, the answer is that surveillance capitalism is a very lucrative business model. Google, Facebook, and Amazon collectively spent over $97 million lobbying in 2020 to shape privacy legislation in their favor. Zero-knowledge identity fundamentally threatens this model. If users can prove things about themselves without handing over raw data, the extraction pipeline gets severed at the source.

Big tech companies formally objected to parts of the W3C Decentralised Identifier standard during ratification. The conflict is that these companies built empires on federated identity silos (Sign in with Apple, Google Sign-In) that lock users into their ecosystems. DIDs and zero-knowledge proofs allow users to carry identity and reputation outside those silos. If an agent can verify its owner’s identity using a DID and a ZK proof, it doesn’t need to go through Apple’s App Store or Google’s OAuth servers to establish trust.

This is a battle for the meta-layer of the web. Big tech wants identity to remain hub-and-spoke, where they sit in the middle. The Web3 and agentic movement wants it peer-to-peer, where users control their own credentials.

Despite resistance from incumbents, a new generation of identity companies is shipping zero-knowledge infrastructure.

The zero-knowledge proof market itself was valued at $1.28 billion in 2024 and is projected to reach $7.59 billion by 2033. The technology is moving from research papers to production at scale.

Global regulations are lining up behind this too. The EU AI Act prohibits certain biometric identification practices and requires transparency for high-risk systems. California’s Automated Decision-Making rules require risk assessments and human involvement. India’s Digital Personal Data Protection Rules mandate encryption, tokenization, and 72-hour breach notification. GDPR grants individuals rights over automated processing and demands data minimisation.

These frameworks create both compliance pressure and market opportunity for privacy-preserving systems. The companies building them are constructing the trust layer for an economy where humans and machines interact as verified entities, each proving what they need to prove, revealing nothing more.

Identity verification is not optional. Every functioning economy needs it. But what form does it take? Either we continue down the surveillance path, accumulating biometric databases that inevitably leak and enable authoritarian monitoring, or we build zero-knowledge systems where identity is decentralised, verification is cryptographic, and accountability doesn’t require data hoarding.

If AI agents are going to run significant portions of the economy, they need passports. Not the invasive kind that creates honeypots. Programmable passports that prove credentials through zero-knowledge proofs, anchor accountability on blockchains, and enable verification without exploitation.

The person with their face in the orb shouldn’t have to surrender their biometrics to prove they’re human. The trading agent moving $50,000 shouldn’t be able to do it anonymously. Both need verifiable identity, but one that doesn’t turn into a weapon the moment it leaks.

Humans over-verified, bots invisible. That world is ending. What comes next is either bigger surveillance databases or zero-knowledge proofs. The infrastructure exists. The standards are maturing. What remains is execution.

That’s it for today. Until next time, keep building.

Disclaimer: This newsletter contains analysis and opinions of the author. Content is for informational purposes only, not financial advice. Trading crypto involves substantial risk - your capital is at risk. Do your own research.